Astonishingly Poor Empirics, Cockshott Edition

“When a statistician criticizes a claim on technical grounds, he or she is declaring not that the original finding is wrong but that it has not been convincingly proven.”

— Andrew Gelman and Kaiser Fung

I’ve been meaning to post again about the statistical evidence for the labour theory of value. However, this debate is not top of my priorities because (1) it feels pretty fruitless given the dynamics of internet debates; (2) Marxist economics, especially the version of it we will discuss in this post, is not massively important given our current political situation; (3) unlike other things I could be doing, going over all of this does not yield me any revenue, and as I am self-employed I need to allocate my time efficiently. Something something self exploitation, but also something something people respond to incentives. Pick your theory.

What’s fascinating about quantitative Marxism online, which seems to draw heavily from the work of people like Michael Roberts and Paul Cockshott, is how elementary its statistical errors are. It can be frustrating to argue with people who simply conflate correlation and causation, then double down when you point this out, but it also gives us an opportunity to revisit the basics. We can ask why statisticians and econometricians don’t do things this way, and this often leads to new insights into things we previously took for granted. I’ve discussed before why we can’t eyeball trend lines of the rate of profit to test the labour theory of value; now I want to discuss why we can’t use correlations between labour hours and output.

Cockshott’s Formulation

Paul Cockshott expresses his view most concisely in a video aptly titled ‘Why labour theory of value is right’. He takes something of a positivist approach here, employing the Popperian notion of falsifiability, and I will follow his lead — with one crucial caveat to come. In particular, Cockshott states that the neoclassical demand and supply theory is unfalsifiable because there is only one data point (a price and quantity at which a commodity is sold) despite there being two unknown equations (demand as well as supply). So far, so good — demand and supply ain’t my favourite model.

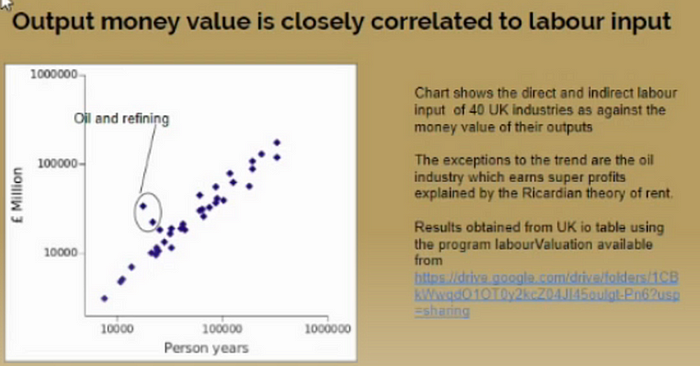

He then argues that the labour theory of value, in contrast, is falsifiable because it predicts that labour inputs (measured by the number of years worked by employees) in a given industry should be closely correlated with the output of that industry. He takes ‘person-years’ within each industry and correlates them with the output of that industry. Sure enough, there seems to be a high correlation (it is frequently above 0.9, which is uncommonly large):

What’s missing from Cockshott’s notion of falsifiability, in my opinion, is the notion of other plausible explanations. We may technically be able to test a theory, like the LTV, while still having many other plausible explanations for what we see. In this instance, the test is no longer a unique test of the theory. If the theory fails this test, it is falsified, so it’s better than not having a test at all. But if it passes, that cannot be said to confirm the theory. This is a close cousin of what Cockshott himself says: if there are too many unknowns compared to knowns, then literally any possibility is consistent with the theory. In the case of demand and supply, we actually can’t test the theory statistically, because the numbers won’t run when the model is not identified (although there are ways round this that Cockshott ignores). With the LTV, we can, but I’d describe it as a weak test at best.

Like so many things, the phrase ‘correlation isn’t causation’ has been ruined by the internet. To avoid just becoming a correlation versus causation guy, those who say it should realise what they are really saying is ‘there are other plausible explanations for the correlation we see’. If you study quantitative social science to a high level, you will find that nobody in seminars says ‘you’re confusing correlation with causation’; they come up with reasons that your estimation could have other plausible explanations. For example, is the positive correlation between education and wages because education increases wages, or because kids with more motivation and higher ability get more education? Is it because family background gets you more education and a better job? The correlation has other explanations, which is why we don’t believe it, but being specific is better than being a Reddit monkey.

(Of course, sometimes correlations are enough: if you told me that more food at the orphanage was correlated with the adult heights of the orphans, I wouldn’t demand a randomised controlled trial as proof we need more food for the orphans. However, with a theory as complex and contentious as the labour theory of value, we need to apply a higher standard. You are, after all, telling me that you have basically solved the economic problem by figuring out the underlying dynamics of capitalism. Then giving me a correlation. So: what other theories of capitalism could explain this correlation?)

Unfortunately for Cockshott, the answer is ‘literally any theory of how capitalism works’. Blair Fix has a well-circulated post in which he argues that the correlation between labour hours and output is just an artefact of industry size. Bigger industries employ more labourers and also have higher output: this is obvious. Bichler and Nitzan (BN) have a widely available spreadsheet where they show that generating a set of cases where individual labour inputs are completely unrelated to prices still produces the industry-level correlation. Cockshott himself says that the labour theory of value states that “the average price of a good will be proportional to the average amount of labour used to make it.” In BN’s example this is not the case, yet we still see the main correlation appealed to by Cockshott. This is the worst-case scenario: our correlation is spurious, in that we are essentially correlating something (in this case quantity produced) with itself. It is stretching the definition of the word to consider this test an example of falsifiability.
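The size artefact is easy to reproduce. What follows is a minimal Python sketch, not a reproduction of BN’s spreadsheet: the function names, distributions, and parameters are all illustrative assumptions. Unit prices and unit labour contents are drawn independently, so they are unrelated by construction, and both are then multiplied by an industry quantity that varies widely.

```python
import math
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / math.sqrt(vx * vy)


def simulate_industries(n=2000, seed=0):
    """Hypothetical economy in which unit prices are unrelated to unit labour."""
    rng = random.Random(seed)
    # Independent draws: labour per unit carries no information about price per unit.
    unit_price = [rng.uniform(1.0, 2.0) for _ in range(n)]
    unit_labour = [rng.uniform(1.0, 2.0) for _ in range(n)]
    # Industry size (quantity produced) varies much more than the per-unit figures.
    quantity = [rng.lognormvariate(0.0, 1.0) for _ in range(n)]
    total_output = [p * q for p, q in zip(unit_price, quantity)]
    total_labour = [l * q for l, q in zip(unit_labour, quantity)]
    return unit_price, unit_labour, total_output, total_labour


unit_price, unit_labour, total_output, total_labour = simulate_industries()
r_unit = pearson(unit_price, unit_labour)
r_industry = pearson(total_output, total_labour)
print(f"unit level:     r = {r_unit:.2f}")
print(f"industry level: r = {r_industry:.2f}")
```

In this toy setup the unit-level correlation should sit near zero while the industry-level one is high, simply because quantity appears on both sides of the industry-level comparison.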

As an analogy, consider making a cake. You could put forward a ‘flour theory of cake’ and show that the volume of flour does indeed correlate well with the eventual size of the cake, then take that as evidence that flour is the key ingredient, the only one that produces ‘cake value’. Yet all you’ve really shown is that flour is an ingredient in cake and that a bigger cake will tend to have more flour. We know, of course, that flour is just one ingredient in cake and that more of every ingredient will be needed to make it larger. My view is that these correlations show that labour is a factor of production, but my view is also that production is a complex combination of various factor inputs and labour is not special among them (except for normative reasons; if that’s your view then fine!).

Cockshott’s further evidence: more correlations

One of Cockshott’s accompanying pieces of evidence is an attempt to correlate other inputs with output and to show that they are more weakly correlated. This supposedly demonstrates that alternative ‘theories of value’ like the energy theory of value do not hold up. The chart below shows the labour correlations again, along with three alternatives: iron and steel; electricity, gas and water supply; and computer and related activities. You can see that the labour coefficients (blue diamonds) are clearly correlated with output, whereas the red squares, green triangles, and black crosses do not correlate at all, basically sitting on the y-axis.

This is quite an unusual method: typically when statisticians want to test one cause against another, they don’t correlate them one by one. Instead, they use multiple regression analysis or some other method that includes all variables at once. Cockshott’s approach is a bit like if those who studied wages and education correlated the two, then separately correlated wages with gender, then age, then parental education, and concluded from the largest correlation that said variable was the key determinant of wages compared to the others. Spot the problem?
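A small synthetic example shows what goes wrong with one-by-one correlations. This is a hedged Python sketch with made-up data; the names `input_a` and `input_b`, and every parameter, are assumptions for illustration only. The outcome depends only on `input_b`, but `input_a` is correlated with `input_b`, so `input_a` still shows a healthy pairwise correlation with the outcome. A regression that includes both at once assigns `input_a` a coefficient near zero.

```python
import math
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / math.sqrt(vx * vy)


def ols_two_regressors(y, x1, x2):
    """Slopes of y on x1 and x2 (intercept included) via the 2x2 normal equations."""
    n = len(y)
    my, m1, m2 = sum(y) / n, sum(x1) / n, sum(x2) / n
    d1 = [v - m1 for v in x1]
    d2 = [v - m2 for v in x2]
    dy = [v - my for v in y]
    s11 = sum(a * a for a in d1)
    s22 = sum(b * b for b in d2)
    s12 = sum(a * b for a, b in zip(d1, d2))
    s1y = sum(a * c for a, c in zip(d1, dy))
    s2y = sum(b * c for b, c in zip(d2, dy))
    det = s11 * s22 - s12 * s12
    b1 = (s1y * s22 - s2y * s12) / det
    b2 = (s2y * s11 - s1y * s12) / det
    return b1, b2


rng = random.Random(1)
n = 5000
input_b = [rng.gauss(0.0, 1.0) for _ in range(n)]
# input_a tracks input_b but has no effect of its own on the outcome.
input_a = [0.8 * b + rng.gauss(0.0, 0.6) for b in input_b]
outcome = [2.0 * b + rng.gauss(0.0, 1.0) for b in input_b]

r_a = pearson(outcome, input_a)
r_b = pearson(outcome, input_b)
coef_a, coef_b = ols_two_regressors(outcome, input_a, input_b)
print(f"pairwise correlations:   a = {r_a:.2f}, b = {r_b:.2f}")
print(f"regression coefficients: a = {coef_a:.2f}, b = {coef_b:.2f}")
```

The pairwise numbers alone would suggest that both inputs matter; only the joint estimate separates them.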

This test also fails to rebut the BN notion that industry size is driving the results because it may simply be the case that other inputs do not scale with size. Think again of a cake: to make a larger cake, you don’t always simply double the number of each ingredient. You may double the flour but not the eggs, and use the same amount of vanilla essence and baking soda as before. You do not double the time you put it in the oven. That doesn’t tell us anything about the importance of each ingredient, or of the oven, which is obviously crucial.

Finally, there is also Cockshott’s somewhat random choice of other industries. It strikes me that the natural alternative to labour is to test ‘capital’ or a more comprehensive measure of energy used than just bills paid. Generally speaking, his empirics are casual and extremely poorly explained and presented. This is a telltale sign that somebody is pulling the wool over your eyes. I also want to take this opportunity to point out that a correlation of 0.9+ is typically taken as a warning sign that the correlation is spurious.

Simulations

Cockshott just does not seem to appreciate how easy it is to get correlations from data even where no relationship exists. To illustrate this, I used simulations in Stata. It took me at most an hour to reproduce his pattern of results in an example where the labour theory of value isn’t true. I generated both labour and capital as normally distributed, though labour had a higher standard deviation (3) than capital (1). That part is important, so remember it!

I then generated output:

Y = 2L + 2K + u

where Y = output

L = labour (hours)

K = capital (money)

u = error term (standard normal)

In this equation, the labour theory of value isn’t true. Capital and labour contribute the same to output: two times the input amount. There is some random error for demand, luck, innovation or what have you. It’s crude, but it’s less crude than just correlating things one by one, as we actually have a model of output.

Value added (or profit) is just V = Y - K - L in this example, from which it follows that V = K + L + u. The correlation between value added and labour was 0.94. The correlation between value added and capital was only 0.36. The results are the same for just output instead of value added. We therefore have exactly the high correlation with labour that Cockshott said was evidence of the LTV, but in a world without the LTV. Furthermore, we have the low correlation with the other factor input, his supposedly conclusive placebo test.
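For readers without Stata, the setup can be approximated in a few lines of Python. This is a rough re-creation rather than the original code: the sample size, seed, zero means, and independent draws are assumptions, since only the standard deviations and the output equation are specified above.

```python
import math
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / math.sqrt(vx * vy)


def simulate(n=10_000, seed=42):
    """Draw L, K, u independently and build Y = 2L + 2K + u and V = Y - K - L."""
    rng = random.Random(seed)
    labour = [rng.gauss(0.0, 3.0) for _ in range(n)]   # sd 3, as in the text
    capital = [rng.gauss(0.0, 1.0) for _ in range(n)]  # sd 1, as in the text
    noise = [rng.gauss(0.0, 1.0) for _ in range(n)]    # standard normal error
    output = [2 * l + 2 * k + u for l, k, u in zip(labour, capital, noise)]
    value_added = [y - k - l for y, k, l in zip(output, capital, labour)]
    return labour, capital, output, value_added


labour, capital, output, value_added = simulate()
r_output_labour = pearson(output, labour)
r_output_capital = pearson(output, capital)
r_value_labour = pearson(value_added, labour)
r_value_capital = pearson(value_added, capital)
print(f"output vs labour:       {r_output_labour:.2f}")
print(f"output vs capital:      {r_output_capital:.2f}")
print(f"value added vs labour:  {r_value_labour:.2f}")
print(f"value added vs capital: {r_value_capital:.2f}")
```

Exact figures depend on the random draw and on the unstated settings, so they need not match 0.94 and 0.36 to the second decimal place. The pattern to look for is a high correlation with labour and a much lower one with capital, even though both inputs enter the output equation with the same coefficient.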

What’s going on?

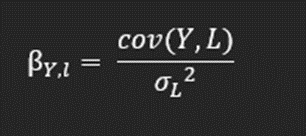

The correlation (ρ) between two variables (say, output Y and labour L) is measured as the covariance of the two, divided by the product of their standard deviations (σ). In our case, the input standard deviations are 1 for capital and 3 for labour, which we chose ourselves. As we ourselves generated Y from K and L, we can also calculate the covariance:

Our linear model (a regression model) multiplies both inputs by 2. Using the above formula to transform regression coefficients into covariances, we find that we just need to multiply the coefficient by the variance (which itself is just the standard deviation squared). The covariance between labour and output is then 2*9 = 18, whereas the covariance between capital and output is only 2*1 = 2.

Since covariance is in the numerator of the correlation coefficient, you can now see why the correlation is so much higher for labour: it’s a mechanical result of higher variance! (The correlation also divides by the standard deviation, but for labour this is 3, far smaller than the variance of 9.) Observed correlation patterns can be due to all sorts of factors and this is why statisticians stopped using them to test theories 100 years ago.
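The arithmetic can be written out in full. This is a sketch that assumes L, K, and u are mutually independent, with the standard deviations used in the simulation (3, 1, and 1 respectively); these are population values, so a finite sample will deviate from them slightly.

```latex
\begin{align*}
\operatorname{Cov}(Y, L) &= \operatorname{Cov}(2L + 2K + u,\; L) = 2\,\sigma_L^2 = 2 \times 9 = 18 \\
\operatorname{Cov}(Y, K) &= \operatorname{Cov}(2L + 2K + u,\; K) = 2\,\sigma_K^2 = 2 \times 1 = 2 \\
\sigma_Y^2 &= 4\,\sigma_L^2 + 4\,\sigma_K^2 + \sigma_u^2 = 36 + 4 + 1 = 41 \\
\rho_{Y,L} &= \frac{\operatorname{Cov}(Y, L)}{\sigma_Y\,\sigma_L} = \frac{18}{3\sqrt{41}} \approx 0.94 \\
\rho_{Y,K} &= \frac{\operatorname{Cov}(Y, K)}{\sigma_Y\,\sigma_K} = \frac{2}{\sqrt{41}} \approx 0.31
\end{align*}
```

Both inputs have the same coefficient of 2; the only thing separating the two correlations is the spread of each input.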

Dubious Responses

There is a tendency I have noticed amongst Cockshott and his ilk to try to get pedantic about what is and isn’t allowed instead of accepting this extremely basic point about the irrelevance of simple correlations. For instance, Cockshott has responded to the spurious correlation charges by saying:

The argument that the correlations observed are spurious depends on the idea that there exists an independent third factor that is the cause of concomitant variation in the persons and monetary flow vectors. Any correlation observed in science could potentially be spurious, so this is always a possibility. But for an allegation of spurious correlation to be borne out, one must both identify this third factor and show that it actually does induce the correlations observed. So what could this third factor be?

According to Cockshott, ‘size’ cannot be easily characterised, quantified, and measured independently of labour hours or output. If we cannot do this, the argument goes, we cannot say that the correlation is invalid, because we cannot say what third factor is driving it.

This is a pretty egregious misunderstanding of what a third factor is. It doesn’t have to be easily measurable: the size of a cake is difficult to measure independently of the volume of its ingredients! To return to our education example, ability is notoriously difficult to measure apart from educational performance and income, and many economists do not accept rough proxies like IQ. Plus, I’m not convinced people are even saying that size is a ‘third factor’ as such. They’re just saying that thinking about the role of size renders the correlation unreliable as evidence of a causal theory. And the labour theory of value is a causal theory.

Along similar lines, Cockshott has responded to Bichler and Nitzan’s spreadsheet by claiming that it violated ‘dimensionality’ because their units were not consistent. He claims that you can only correlate two variables if they have the same dimensions. He then claims that prices themselves do not have the same dimensions, since the price of a pencil is $/pencil and the price of oil is $/oil. Only when you multiply them by quantities do you eliminate this problem and get only $. Hence the industry-level correlations, which pass the test of dimensionality. This is a very strange reply because statistical analysis frequently correlates/regresses things which are incommensurable, like years of education and money wages. Or the rate of profit and the organic composition of capital, as Cockshott has done himself.

In any case, for me what BN show is not so much that the world is actually their little spreadsheet, but that they only need a little spreadsheet to produce the same result as Cockshott. It’s a bit like if Cockshott said he had the fastest car, then BN beat him in their own car, then Cockshott started pointing out all sorts of problems with their car’s interior. That hardly changes the result; what’s more, if they can beat you then someone else surely can, too. Cosma Shalizi faced similar pushback when he simulated a multifactor intelligence model to show that IQ could just be an emergent phenomenon; critics missed that he didn’t believe his simulations were the correct theory of intelligence. Astonishingly, I do not believe that the actual economy is defined by Y = 2L + 2K + u, either.

It doesn’t help, of course, that Cockshott’s rebuttals usually do not stand up even on their own terms. As Fix has pointed out, saying prices and labour values are incommensurable also contradicts the primary basis for the labour theory of value, which is that prices are commensurable. Prices are literally what makes the capitalist economy amenable to mathematical analysis. Cockshott also tries to argue that BN’s simulated ‘labour values’ have no natural units even though he spends the same paper arguing for the use of labour values measured in hours. Such are the perils of making up ad hoc, sophistical reasons to evade the obvious charge that your statistics would fail a first-year undergraduate course: you end up contradicting yourself!

In other words, Cockshott’s response to critics who point out that there are other easy explanations is to say “no, that explanation cannot be true for separate reasons, and my way is the only way to test the LTV”. To be charitable, this may have some legs in some cases, but the net result of all of these interventions is that we’re left with a weak test using aggregate correlations, so all it takes is another idiot with Stata to produce another example that shows the correlation is not a unique test of the theory. We can keep doing this until the cows come home, or we can accept the last 100 years of statistical progress and try to create a causal research design that simply eliminates other possible explanations for what we see.

On this note, my suspicion is that any reply to my post would try to detail how the specific example I simulated doesn’t make sense as a theory of capitalism for some reason or other. This would be a mistake. What I’m showing is that me messing around for half an hour can easily produce the evidence Cockshott appeals to as knock-down evidence for the labour theory of value. This is a strong indication that the statistical result is not that special. Yes, we could spend more time with some other model — a neoclassical production function, an agent-based model, or a complexity framework — and manage to produce similar relationships. This would have the added benefit that we’d believe that model more than my ‘model’. The bottom line, however, is that when it is this easy to produce an alternative explanation for a finding used to support a theory, that finding is no longer conclusive evidence of a theory.

Doing Better

I’m not sure why so many insist on clinging to Cockshott and co., as their contributions are undoubtedly at the bottom of what Marxism has to offer. Nothing they produce even approaches the level of statistical rigour required for falsifying a theory these days. A good test would be to look at cases where labour inputs changed suddenly (perhaps due to weather, policy changes, or global market conditions) and to see how that affected prices, profits, and output. Alternatively, you could make out-of-sample predictions, using labour inputs today to predict output tomorrow. These are both methods used by non-Marxist economists and, if we are going to be Popperian about things, then they fit the falsifiability criterion much better than Cockshott’s approach. It’s not just that I haven’t seen this kind of evidence from Marxists; it’s that I haven’t seen them even acknowledge that they’d need it.
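The out-of-sample idea can be sketched in Python as follows. Everything here is a hypothetical illustration: the series are synthetic placeholders rather than real data, and the function names, the train/holdout split, and the naive benchmark are all assumptions. The model is fitted on early periods only and then scored on later periods it never saw, against a benchmark that ignores labour entirely.

```python
import math
import random


def fit_line(x, y):
    """Least-squares intercept and slope for y = a + b * x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((v - mx) ** 2 for v in x)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    slope = sxy / sxx
    return my - slope * mx, slope


def rmse(pred, actual):
    """Root mean squared forecast error."""
    return math.sqrt(sum((p - a) ** 2 for p, a in zip(pred, actual)) / len(actual))


def out_of_sample_test(labour_lagged, output, n_train):
    """Fit on the first n_train periods, then score forecasts on the rest."""
    a, b = fit_line(labour_lagged[:n_train], output[:n_train])
    holdout_x, holdout_y = labour_lagged[n_train:], output[n_train:]
    model_pred = [a + b * x for x in holdout_x]
    # Naive benchmark: always predict the training-period mean of output.
    train_mean = sum(output[:n_train]) / n_train
    baseline_pred = [train_mean] * len(holdout_y)
    return rmse(model_pred, holdout_y), rmse(baseline_pred, holdout_y)


# Synthetic placeholder series, NOT real data: output depends on lagged labour by construction.
rng = random.Random(7)
labour_lagged = [rng.gauss(10.0, 2.0) for _ in range(300)]
output = [1.5 * l + rng.gauss(0.0, 1.0) for l in labour_lagged]

model_rmse, baseline_rmse = out_of_sample_test(labour_lagged, output, n_train=200)
print(f"model RMSE:    {model_rmse:.2f}")
print(f"baseline RMSE: {baseline_rmse:.2f}")
```

The model wins here only because the synthetic data were built that way. The point of the design is that, on real data, the theory could lose: if labour inputs did not beat the benchmark on held-out periods, that would count against it.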

Of course, if you want to say that Marxism and the LTV should not be treated in a Popperian, ‘scientific’ manner, then that’s absolutely fine. Just don’t appeal to existing statistical tests as proof of it. Embrace the fact that Marxism is a lens through which to view capitalism, not a dogma that you have proved — especially when using such shoddy statistical tests.